Can you trust what you see and hear online anymore? Deepfake technology makes this question even more important. It uses artificial intelligence to create synthetic media, blending reality with fiction.

Deepfake technology has become commonplace online. From fabricated celebrity clips to doctored speeches by politicians, these AI creations are changing the way we see and understand information.

Deepfakes have evolved quickly since 2017. What began as simple face-swapping videos has grown into advanced synthetic media. Now, deepfakes are used in many fields, showing both their benefits and dangers.

Exploring deepfakes, we see their wide-ranging impact. They can be fun, like putting Nicolas Cage in every movie. But they also have serious risks, like identity theft and spreading false information.

What Are Deepfakes: Understanding the Technology

Deepfakes are synthetic videos, images, or audio clips generated by computers. They blend existing footage with generated content to show things that didn’t really happen. This tech uses advanced AI to make people look like they’re saying or doing things they’re not.

The term “deepfake” started in late 2017. Since then, it has grown to include many uses beyond just swapping faces.

Definition and Basic Concepts

Deepfake algorithms swap a person’s image in a video with another’s. These fake videos are very hard to tell from real ones. They can include voice changes, video filters, or completely made-up scenes.

Evolution of Synthetic Media

The rise of deepfakes has been remarkable, thanks to AI and machine learning. What started as simple tricks has become very realistic. In 2019, the number of deepfake videos online doubled compared with 2018.

Most of these videos were pornographic, and most featured women.

The Role of Artificial Intelligence

Deepfakes depend a lot on AI and machine learning. These systems use special algorithms to change videos. They can create fake data and also check and improve it.

This tech has good and bad sides. Synthetic data can help train systems such as self-driving cars, but the same techniques can also spread false information.

- Deepfakes use Generative Adversarial Networks (GANs).
- Machine learning algorithms analyze and manipulate facial features.
- AI components generate and refine fake data.

The Science Behind Deepfake Creation

Deepfake technology uses complex machine learning algorithms. It creates realistic synthetic media. This field combines artificial intelligence with image and video processing.

Generative Adversarial Networks (GANs)

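GANs are key to deepfake algorithms. They have two parts: a generator and a discriminator. The generator makes fake images, and the discriminator tries to spot them. Training pits the two against each other, so the generator’s fakes become harder to spot over time.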
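The generator-versus-discriminator loop can be sketched in a few lines. This is a toy illustration, not how production deepfake tools are built: it works on single numbers instead of images, the "real" data is assumed to be numbers near 4, and all function names, the learning rate, and the linear model shapes are assumptions made for the example.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminate(x, w, c):
    """Discriminator: estimated probability that sample x is real."""
    return sigmoid(w * x + c)


def generate(z, a, b):
    """Generator: turn random noise z into a fake sample."""
    return a * z + b


def discriminator_step(real, fake, w, c, lr=0.05):
    """One gradient step pushing D(real) toward 1 and D(fake) toward 0."""
    d_real = discriminate(real, w, c)
    d_fake = discriminate(fake, w, c)
    grad_w = -(1.0 - d_real) * real + d_fake * fake
    grad_c = -(1.0 - d_real) + d_fake
    return w - lr * grad_w, c - lr * grad_c


def generator_step(z, a, b, w, c, lr=0.05):
    """One gradient step pushing D(generate(z)) toward 1."""
    fake = generate(z, a, b)
    grad_fake = -(1.0 - discriminate(fake, w, c)) * w
    return a - lr * grad_fake * z, b - lr * grad_fake


def train(steps=2000, seed=0):
    """Alternate discriminator and generator updates (toy setup)."""
    rng = random.Random(seed)
    a, b, w, c = 1.0, 0.0, 0.0, 0.0
    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)  # assumed "real" data: numbers near 4
        z = rng.gauss(0.0, 1.0)     # random noise fed to the generator
        w, c = discriminator_step(real, generate(z, a, b), w, c)
        a, b = generator_step(z, a, b, w, c)
    return a, b, w, c
```

Calling `train()` returns the final generator and discriminator parameters. GAN training tends to oscillate rather than settle, so no particular final values are claimed here; the point is only the alternating structure of the two updates.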

Autoencoder Technology

Autoencoders handle face swapping in deepfakes. They compress a face into a compact representation of its features and expressions. Then, they reconstruct it to create convincing synthetic faces for videos.

Machine Learning Algorithms

Advanced machine learning makes deepfakes possible. Neural networks learn from huge datasets. They make faces and voices look real.

As AI gets better, deepfakes will become even more realistic. Experts say this will happen in 2 to 10 years.

Deepfake technology is both a worry and a creative tool. It’s used in entertainment and education.

Common Examples of Deepfake Technology

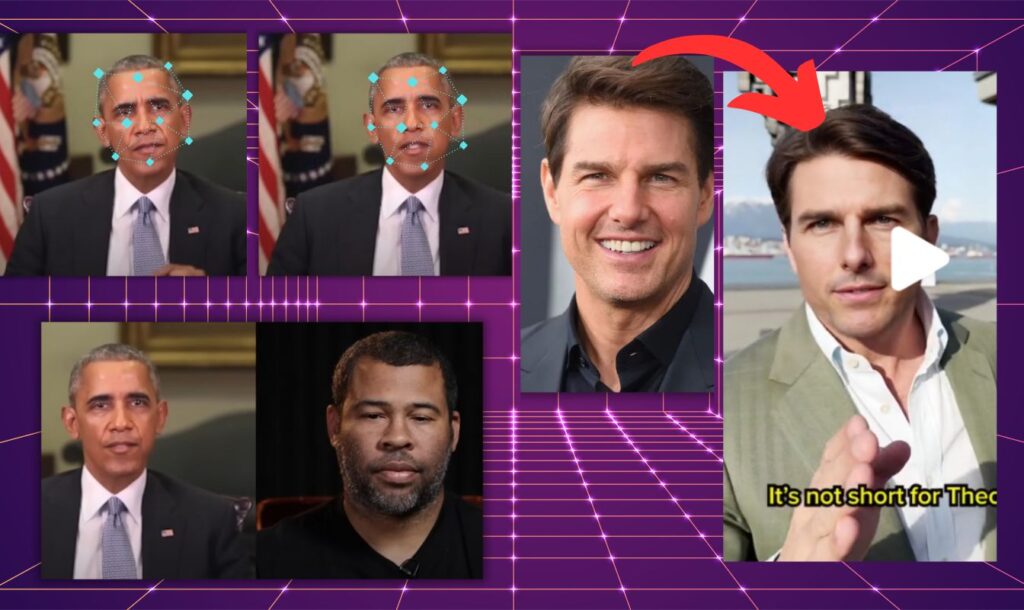

Deepfake technology has made huge leaps in recent years. It is used to manipulate footage of celebrities and politicians, and the results keep getting more realistic. Let’s take a look at some key examples in different fields.

Celebrity and Politician Fakes

Fake videos of celebrities are extremely common on the internet. Videos of a Tom Cruise lookalike on TikTok show the power of this technology. In politics, a fake video of Barack Obama by Jordan Peele shows how easy it is to fake public figures.

Entertainment Industry Applications

The entertainment world loves deepfake tech. Big franchises like Star Wars use it to bring back characters or make actors look younger. It’s a way to improve movies and TV shows.

Social Media Trends

Deepfakes are big on social media. People make fun videos by swapping faces in movie scenes. But they also raise worries about fake news spreading. The fun and the fake are getting mixed up as more people use this technology.

- Over 20,000 AI-generated child sexual abuse material (CSAM) images were identified in one month on a dark web forum.
- Cybercrimes fueled by AI are expected to cost $8 trillion this year.
- Voice cloning scams using audio deepfakes have caused financial losses.
- Deepfakes are used to impersonate content creators for fraudulent schemes.

As deepfake tech gets better, we need to know more about it. Some uses are fun or helpful, but others are risky. They can harm privacy, security, and trust in digital media.

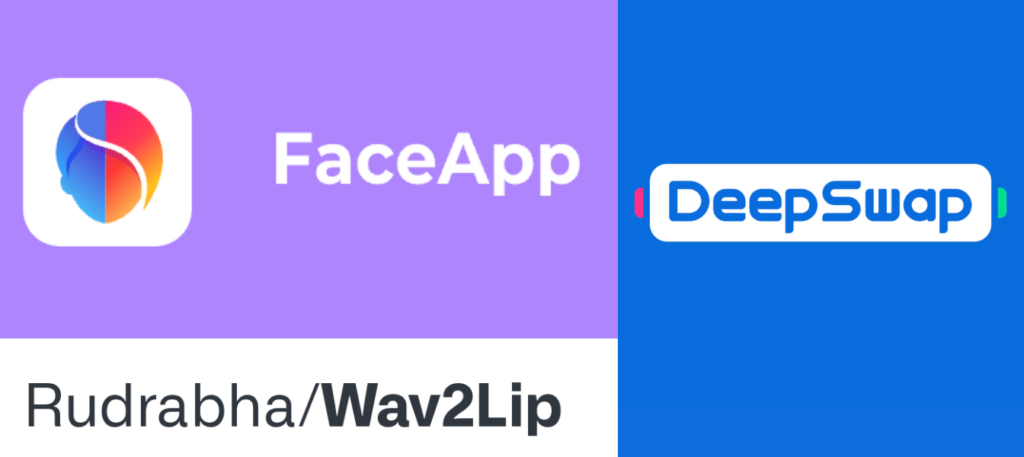

Tools and Software for Creating Deepfakes

The world of deepfake applications is growing fast. Tools like FaceSwap, Face2Face, and DeepFaceLab are now available. They let users change facial expressions and swap faces in videos.

Creating high-quality deepfakes needs powerful hardware and lots of data. But simpler versions can be made with apps and websites. For example, Reface and Deepfakes Web make face swapping easy. Voice cloning tools also let users change or mimic voices.

These tools are easy to use, but they also raise concerns. As deepfake tech gets better, finding ways to spot fake media becomes more important. Groups are working on ways to check if media is real, such as digital watermarks and blockchain.

Important

We are not responsible for any illegal use of these applications. Our goal is educational and cultural.

Potential Risks and Threats

Deepfake risks are growing fast. In 2022, two-thirds of cybersecurity experts said they faced malicious deepfakes. This is a 13% increase from the year before. It shows we need to be more aware and protect ourselves.

Identity Theft and Fraud

Deepfakes can lead to identity theft. Criminals make fake identities that look real, causing financial harm. For example, scammers used deepfake video to trick an employee at a multinational firm’s Hong Kong office into transferring about $25 million.

Political Manipulation

Deepfakes threaten political stability. They can spread false information and change public opinion. In the 2020 U.S. election, there were worries about deepfake videos affecting voters. This shows how deepfakes can manipulate politics.

Social Engineering Attacks

Deepfakes make social engineering attacks more convincing. In one case, scammers used an audio deepfake to trick a CEO into sending €220,000 to a fake supplier. This shows how deepfakes can trick people and get past security.

To fight these threats, companies must train employees, use strong verification checks, and adopt AI detection tools. We need a strong plan to protect everyone from deepfake risks.

Detection Methods and Prevention

As AI-generated content gets better, finding deepfakes is more important. Visual checks look for odd lip-syncing, blinking, and facial movements. But these methods can’t catch everything.
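One of the visual checks above, blinking, can be sketched as a simple heuristic. The sketch assumes some face-tracking tool has already produced a per-frame "eye openness" score between 0 and 1; that input, the function names, and every threshold below are illustrative assumptions, not validated detection parameters.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count open-to-closed transitions across a sequence of frames."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blinks_per_minute(eye_openness, fps, closed_below=0.2):
    """Convert a blink count into a per-minute rate for the clip."""
    if not eye_openness:
        raise ValueError("need at least one frame")
    minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_below) / minutes


def looks_suspicious(eye_openness, fps, low=4.0, high=40.0):
    """Flag clips whose blink rate falls outside an assumed normal range."""
    rate = blinks_per_minute(eye_openness, fps)
    return rate < low or rate > high
```

A clip that this check flags is only a candidate for closer review. As the text notes, checks like this can’t catch everything, and a convincing deepfake may blink at a perfectly ordinary rate.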

AI-Powered Detection Tools

AI tools lead the fight against deepfakes. They use learning algorithms to find signs of tampering. Some compare content to known deepfakes. Others spot tiny flaws that humans miss.
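The "compare content to known deepfakes" idea can be sketched in its simplest form as a fingerprint lookup. This is a deliberately minimal stand-in: it uses an exact SHA-256 hash, so it only matches byte-identical copies, whereas real detection tools would more plausibly rely on perceptual hashing or trained classifiers. The function names and the idea of a pre-built set of known fingerprints are assumptions for illustration.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 fingerprint of a media file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def is_known_fake(data: bytes, known_fingerprints: set) -> bool:
    """Check the file's fingerprint against a set of known deepfakes."""
    return fingerprint(data) in known_fingerprints


# Usage: build the set once, then check incoming files against it.
known = {fingerprint(b"bytes of a previously identified deepfake")}
print(is_known_fake(b"bytes of a previously identified deepfake", known))
print(is_known_fake(b"bytes of some other clip", known))
```

Re-encoding or cropping a clip changes its exact hash, which is why this sketch would miss altered copies.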

Authentication Systems

Authenticating media is key. Digital signatures and blockchain records make tampering detectable, because any change to the content no longer matches the original record. These methods make it tough for deepfakes to pass as authentic. Adding biometrics for extra security helps, too.
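A minimal sketch of signature-style authentication follows. It uses an HMAC with a shared secret key from Python’s standard library as a stand-in; a real digital signature scheme would use a public/private key pair so that anyone can verify without being able to sign. The function names and the key handling are assumptions for illustration.

```python
import hashlib
import hmac


def sign_media(data: bytes, key: bytes) -> str:
    """Produce an authentication tag for the media bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify_media(data: bytes, key: bytes, tag: str) -> bool:
    """Return True only if the media bytes still match the tag."""
    expected = sign_media(data, key)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, tag)
```

If a publisher tags a video at release, any later edit to the file, including a face swap, changes the bytes and makes `verify_media` return `False`. The check says nothing about whether the original was truthful, only that it has not been altered since it was tagged.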

Even with better detection, the battle against deepfakes goes on. Creators keep making their fake media better. This means we need to keep improving how we spot and stop deepfakes.

Legal and Ethical Implications

Deepfake technology is growing fast, bringing big risks and ethical worries. Most deepfakes, 96%, are pornographic videos. Millions see these videos, which are often made without the consent of the people depicted.

Deepfakes can cause legal problems beyond just personal harm. Scammers use deepfakes to pretend to be company leaders. In one such case, a company lost $25 million. This shows that we need strong laws to fight these crimes.

Ethical issues also come up with deepfakes in politics and business. They could change election results if used to trick voters. Some companies use them for marketing. They make videos that seem real to sell products online.

To tackle these problems, experts suggest:

- Setting strict rules for using someone’s image.
- Being open about deepfake content.
- Creating ways to check whether digital content is real.
- Teaching people about deepfake technology and its dangers.

Since laws are slow to catch up, the industry and others must step in. They need to work together to set rules and be clear about how deepfakes are used. This helps protect against the misuse of this powerful technology.

Future Developments in Deepfake Technology

Deepfake technology is growing fast. Experts say we’ll see a big jump in deepfake videos and voices, from 500,000 in 2023 to 8 million by 2025. This growth means we need to know more about deepfakes, as only a third of people knew about them in 2022.
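To put the projected jump in perspective, the figures above can be turned into growth rates with a short calculation. The function names are made up for this example, and the calculation assumes steady year-over-year growth between 2023 and 2025, which the forecast itself does not state.

```python
def growth_factor(start: float, end: float) -> float:
    """Overall multiplier between the starting and ending counts."""
    return end / start


def annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly multiplier that would produce the overall growth."""
    return (end / start) ** (1.0 / years)


total = growth_factor(500_000, 8_000_000)              # 16x overall
per_year = annual_growth(500_000, 8_000_000, years=2)  # about 4x per year
print(f"{total:.0f}x overall, roughly {per_year:.1f}x per year")
```

Under that assumption, the forecast implies the number of deepfakes roughly quadrupling each year.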

Emerging Applications

Deepfakes are not just for fun anymore. Researchers at NSAIL are looking into using them to fight terrorism. Their project, TREAD, aims to use deepfakes to reduce conflicts. But there are risks, like fake evidence and new ways of harassment.

Technological Advancements

Deepfake creation tools keep getting better. Fake media that looks real poses a big challenge. This has led to calls for rules and a way to weigh the risks and benefits of deepfakes.

Industry Impact

Deepfakes are reshaping entire industries. In 2023, actors went on strike, in part over the use of AI and deepfakes. Fake news cost the world $78 billion in 2020. In June 2023, the European Parliament approved its position on the AI Act, the European Union’s rules on AI. This shows a move to tackle AI risks in different fields.

Conclusion

Deepfakes are now a major force in synthetic media. They can inspire creativity but also pose significant risks. The rise in deepfakes, particularly in finance, shows that we need better ways to detect and prevent them.

The impact of deepfakes goes beyond finance. They are now affecting the legal sector and businesses in many areas. With the rapid advancement of artificial intelligence, it is important for businesses to keep up. We must use synthetic media responsibly and with caution. To do this, we must learn about deepfakes.

FAQs

What are deepfakes?

Deepfakes are fake videos made with AI. They look real because of advanced technology. They can involve altered voices, video filters, or completely made-up scenes.

How are deepfakes created?

Deepfakes use AI, like Generative Adversarial Networks (GANs). These tools learn from a lot of data. They make fake videos that look real using neural networks, which are loosely modeled on how our brains work.

What are the potential risks associated with deepfakes?

Deepfakes can steal identities and cause financial fraud. They can also spread false information and influence elections. There is a big worry about privacy and trust in digital media.

How can deepfakes be detected?

To spot deepfakes, look for odd lip-syncing or facial movements. AI tools are being made to find these fakes. They check for inconsistencies and use deep learning.

What are the legal and ethical implications of deepfakes?

Deepfakes raise many legal and ethical questions. There’s no clear law yet. Concerns include privacy, consent, and trust in digital media.
